This blog post is written by AI CDT student, Isabella Degen

This post summarises Prof. Kerstin Eder’s talk on the well-established procedures and practices of verification and validation (V&V) and how they relate to AI algorithms. The aim is to inspire readers to apply better V&V processes to their AI research.

Verification is the process used to gain confidence in the correctness of a system compared to its requirements and specifications. Validation is the process used to assess if the system behaves as intended in its target environment. A system can verify well, meaning it does what it was specified to do, and not validate well, meaning it does not behave as intended.
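
To make the distinction concrete, here is a minimal sketch of a system that verifies well but does not validate well. Everything in it is an assumption made for illustration and does not come from the talk: the braking requirement, the friction values and the function names are hypothetical, and the braking model is the idealised textbook formula.

```python
G = 9.81  # gravitational acceleration in m/s^2

# Hypothetical requirement: from 50 km/h the vehicle stops within 30 m.
SPEC_SPEED_KMH = 50.0
SPEC_MAX_STOPPING_M = 30.0
SPEC_FRICTION = 0.7  # the specification implicitly assumed a dry road


def braking_distance_m(speed_kmh: float, friction: float) -> float:
    """Idealised braking distance v^2 / (2 * mu * g), ignoring reaction time."""
    speed_ms = speed_kmh / 3.6
    return speed_ms ** 2 / (2 * friction * G)


def verifies() -> bool:
    """Verification: does the system meet its written specification?"""
    return braking_distance_m(SPEC_SPEED_KMH, SPEC_FRICTION) <= SPEC_MAX_STOPPING_M


def validates(environment_frictions: list[float], obstacle_gap_m: float) -> bool:
    """Validation: does the system behave as intended in every target condition?"""
    return all(
        braking_distance_m(SPEC_SPEED_KMH, mu) <= obstacle_gap_m
        for mu in environment_frictions
    )


# Assumed target environment: dry, wet and icy roads (illustrative friction values).
TARGET_FRICTIONS = [0.7, 0.4, 0.1]

print(verifies())                       # checks the specification on the assumed dry road
print(validates(TARGET_FRICTIONS, 30))  # checks intended behaviour across the target environment
```

Under these assumptions the first check passes and the second fails: the specification is met, but the vehicle does not stop in time on an icy road, which is part of the environment it is meant to operate in.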

V&V is a well-established and formalised practice, yet it remains challenging for systems that fully or partially involve AI algorithms. Many AI algorithms are black boxes that offer no transparency about how they operate. Many are non-deterministic by design and can give different, equally correct answers to similar or even identical inputs. Ideally, they also handle new situations well without having been trained for every situation. Therefore, accurately and exhaustively listing all the requirements against which these algorithms need to be verified is practically impossible.

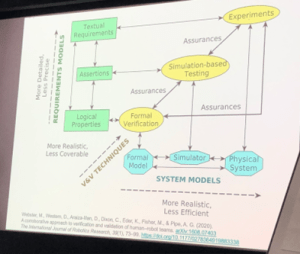

V&V methods for complex robotic systems like automated vehicles are well-established. Automated vehicles need to be capable of operating in an environment where unexpected situations occur. Various ISO standards (ISO 13485 – Medical Devices Quality Management, ISO 10218-1 – Robots and Robotic Devices, ISO 12207 – Systems and Software Engineering) describe different V&V practices required for software, systems and devices. These standards expect multiple processes and practices to be combined to meet the required quality. No single practice covers the full extent of V&V; each has its shortcomings. The three techniques for V&V are formal verification, simulation-based verification and experiments [3]. The image below arranges these techniques by how realistic and coverable they are, where coverability refers to how much of the system a technique can analyse [1].

The image shows the framework for corroborative V&V [1].

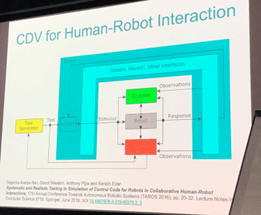

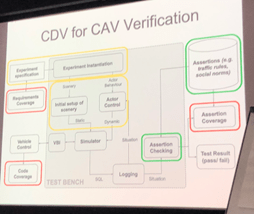

One approach to simulation-based testing is coverage-driven verification (CDV). A two-tiered test generation approach, in which abstract test sequences are computed first and then concretised, has been shown to achieve a high level of automation [2]. Coverage here includes code coverage, structural coverage (e.g. employing Finite State Machines) and functional coverage (including requirements and situations).

The images show the CDV process (left) and its translation to an automated vehicle scenario (right) [2].
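
The two-tiered idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tooling used in [2]: the pedestrian actions, the speed ranges and the choice of action-to-action transitions as the functional coverage goal are all hypothetical.

```python
import itertools
import random

# Hypothetical abstract actions for a pedestrian in a crossing scenario.
ABSTRACT_ACTIONS = ["wait", "walk", "run"]

# Hypothetical speed ranges in m/s used to concretise each abstract action.
SPEED_RANGES = {"wait": (0.0, 0.0), "walk": (0.8, 1.8), "run": (2.5, 5.0)}


def abstract_tests(length):
    """Tier 1: enumerate every abstract action sequence of the given length."""
    return list(itertools.product(ABSTRACT_ACTIONS, repeat=length))


def concretise(sequence, rng):
    """Tier 2: attach concrete simulator parameters to an abstract sequence."""
    return [(action, rng.uniform(*SPEED_RANGES[action])) for action in sequence]


def functional_coverage(sequences):
    """Fraction of action-to-action transitions exercised by the test suite."""
    goal = set(itertools.product(ABSTRACT_ACTIONS, repeat=2))
    seen = {pair for seq in sequences for pair in zip(seq, seq[1:])}
    return len(seen & goal) / len(goal)


rng = random.Random(0)  # fixed seed so the concrete tests are reproducible
suite = abstract_tests(length=3)
concrete_suite = [concretise(seq, rng) for seq in suite]
coverage = functional_coverage(suite)
```

In a full CDV loop, the measured coverage would feed back into test generation to target the transitions or situations not yet exercised; the sketch stops at measuring it.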

Tests can also be generated from belief-desire-intention (BDI) agents used as models. These agents achieve coverage that is equal to or higher than that of model-checking automata. BDI agents can emulate the agency present in Human-Robot Interactions. However, the cost of learning a belief set has to be considered [3]. Similarly, software testing agents can be used to generate tests for simulation-based automated vehicle verification. Such an agency-directed approach is robust and efficient. It generates twice as many effective tests as pseudo-random test generation. Moreover, these agents are encoded to behave naturally without compromising the effectiveness of test generation [4].
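
The contrast between agency-directed and pseudo-random test generation can be illustrated with a toy simulation. The sketch below is a hypothetical grid world, not the setup from [4]: the policies, the distances and the definition of an "effective" test (the pedestrian is on the road while the vehicle is one or two cells away) are assumptions, and it makes no attempt to reproduce the reported figures.

```python
import random


def run_test(policy, crossing_x, rng, steps=10):
    """Return True if the pedestrian is on the road while the vehicle is 1-2 cells away."""
    lateral = 2  # pedestrian starts two cells from the road (lateral == 0 is the road)
    for vehicle_x in range(1, steps + 1):
        gap = crossing_x - vehicle_x  # distance from the vehicle to the crossing point
        lateral = policy(lateral, gap, rng)
        if lateral == 0 and 0 < gap <= 2:
            return True
    return False


def random_policy(lateral, gap, rng):
    """Pseudo-random baseline: step towards the road, away from it, or stay put."""
    return min(2, max(0, lateral + rng.choice([-1, 0, 1])))


def directed_policy(lateral, gap, rng):
    """Goal-directed agent: walk onto the road once the vehicle is believed to be close."""
    return max(0, lateral - 1) if gap <= 3 else lateral


def count_effective(policy, n_tests=200, seed=0):
    """Count effective tests over randomly placed crossing points."""
    rng = random.Random(seed)
    return sum(run_test(policy, rng.randint(4, 8), rng) for _ in range(n_tests))


directed_count = count_effective(directed_policy)
random_count = count_effective(random_policy)
```

Because the directed agent acts on its belief about the vehicle's position, every test it produces forces an interaction in this toy world, whereas the random pedestrian only does so by chance.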

The hope is that, inspired by these techniques used to test robotic systems, we will promote V&V to first-class citizens when designing and implementing AI algorithms. V&V for AI algorithms requires innovation and a creative combination of existing techniques, such as intelligent agency-based test generation. The reward will be increased trust in AI algorithms.

References:

[1] Webster, Matt, et al. “A corroborative approach to verification and validation of human–robot teams.” The International Journal of Robotics Research 39.1 (2020): 73-99. https://journals.sagepub.com/doi/full/10.1177/0278364919883338

[2] Araiza-Illan, Dejanira, et al. “Systematic and realistic testing in simulation of control code for robots in collaborative human-robot interactions.” Towards Autonomous Robotic Systems: 17th Annual Conference, TAROS 2016, Sheffield, UK, June 26–July 1, 2016, Proceedings. Springer International Publishing, 2016. https://link.springer.com/chapter/10.1007/978-3-319-40379-3_3

[3] Araiza-Illan, Dejanira, Anthony G. Pipe, and Kerstin Eder. “Model-based test generation for robotic software: Automata versus belief-desire-intention agents.” arXiv preprint arXiv:1609.08439 (2016). https://arxiv.org/abs/1609.08439

[4] Chance, Greg, et al. “An agency-directed approach to test generation for simulation-based autonomous vehicle verification.” 2020 IEEE International Conference On Artificial Intelligence Testing (AITest). IEEE, 2020. https://arxiv.org/abs/1912.05434