This review is written by CDT Student Oliver Deane.

Day 2 of the IAI CDT’s January Research Skills event included a diverse set of talks that introduced valuable strategies for conducting original and impactful research.

Unifiers and Diversifiers

Professor Gavin Brown, a lecturer at the University of Manchester, kicked things off with a captivating talk on a dichotomy of scientific styles: Unifying and Diversifying.

Drawing on quotations and concepts from a range of philosophical figures, Prof. Brown contended that most sciences, and indeed most scientists, are dominated by one of these styles or the other. He described how a ‘Unifier’ focuses on general principles, seeking out commonalities between concepts to construct all-encompassing explanations for phenomena, while a ‘Diversifier’ ventures into the nitty-gritty, exploring the details of a task in search of novel solutions to specific problems. As Prof. Brown explained, this dichotomy keeps science in a “dynamic equilibrium”: unifiers construct rounded explanations that are subsequently explored and challenged by diversifying thinkers. In turn, the new evidence drives unifiers to adapt their initial explanations, and round and round we go.

Examples from the field

Prof. Brown went on to illustrate these styles with well-known figures from the field. He identified DeepMind co-founder Demis Hassabis as a textbook ‘Unifier’, who uses a substantial knowledge of the broad research landscape to connect and combine ideas from different disciplines. By contrast, Yann LeCun, master of the Convolutional Neural Network, falls comfortably into the ‘Diversifier’ category; he has a focused view of the landscape, specializing in a single concept to identify practical, previously unexplored solutions.

Relevant Research Strategies

We were then encouraged to reflect upon our own research instincts and consider the degree to which we adopt each style. With this in mind, Prof. Brown introduced valuable strategies for identifying novel and worthwhile research avenues. Unifiers can look under the hood of existing solutions, before building bridges across disciplines to identify alternative concepts that can be reconstructed and reapplied to the given problem domain. Diversifiers, on the other hand, should adopt a data-centric point of view, challenging existing assumptions and, in doing so, altering their mindset to approach tasks from unconventional angles.

This fascinating exploration into the world of Unifiers and Diversifiers offered much food for thought, providing us with practical insights that can be applied to our broader research methodologies, as well as our day-to-day studies.

Research Skills in Interactive AI

After a short break, a few familiar faces delved deeper into specific research skills relevant to the three core components of the IAI CDT: Data-Driven AI, Knowledge-Based AI, and Interactive AI.

Data-Driven AI

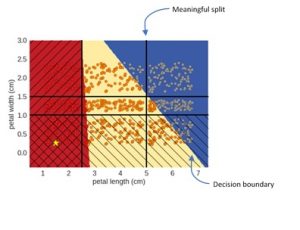

Professor Peter Flach began his talk by reframing data-driven research as a “design science”: one must analyze a problem, design a solution and build an artefact accordingly. As a result, the emphasis of the research process falls on creativity; researchers should approach problems by identifying novel perspectives and cultivating original solutions, perhaps by challenging some of the underlying assumptions made by existing methods. Peter proceeded to highlight the importance of the evaluation process in Machine Learning (ML) research, introducing some key Dos and Don’ts to guide scientific practice. DO formulate a hypothesis, DO expect an onerous debugging process, and DO prepare for mixed initial results. DON’T use too many evaluation metrics; select an appropriate metric given a hypothesis and stick with it. And, to keep bias out of the evaluation process, DON’T set up an evaluation to favor one method over another: “it is not the Olympic Games of ML”.
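To make these Dos and Don’ts a little more concrete, here is a minimal sketch of how they might look in practice. It is my own illustration rather than anything shown in the talk: the hypothesis text, the toy folds and the helper names (`accuracy`, `paired_differences`) are all hypothetical, and accuracy is only assumed to be the appropriate metric for this made-up hypothesis.

```python
from statistics import mean, stdev

# Hypothetical hypothesis, written down before any results are seen.
HYPOTHESIS = "Method B improves accuracy over method A on this task"


def accuracy(y_true, y_pred):
    """The single metric chosen up front to match the hypothesis."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def paired_differences(folds, metric):
    """Score both methods on the same folds and return (B - A) per fold."""
    return [metric(y, pred_b) - metric(y, pred_a) for y, pred_a, pred_b in folds]


# Toy, made-up folds: (true labels, method A predictions, method B predictions).
folds = [
    ([1, 0, 1, 1], [1, 0, 0, 1], [1, 0, 1, 1]),
    ([0, 0, 1, 0], [0, 1, 1, 0], [0, 0, 1, 1]),
    ([1, 1, 0, 0], [1, 1, 0, 0], [1, 0, 0, 0]),
]

diffs = paired_differences(folds, accuracy)
print(HYPOTHESIS)
print(f"mean difference = {mean(diffs):+.3f}, spread = {stdev(diffs):.3f}")
```

Both methods are scored on identical folds with the one pre-selected metric, and the script reports per-fold differences rather than crowning a winner. On these toy folds method B does better on one fold, the same on another and worse on the third, which is exactly the sort of mixed initial result we were told to expect.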

Knowledge-Based AI

Next, Dr. Oliver Ray covered knowledge-based AI, describing it as the bridge between weak and strong AI. He emphasized that knowledge-based AI is the backbone for building ethical models, permitting interpretability, explainability, and, perhaps most pertinently, interactivity. Oliver framed the talk in the context of the hypothetico-deductive model, a description of the scientific method in which we formulate a falsifiable hypothesis before using it to explore why some outcome is not as expected.

Interactive AI

Finally, Dr. Paul Marshall took listeners on a whistle-stop tour of research methods in Interactive AI, focusing on scientific methods adopted by the field of Human-Computer Interaction (HCI). He pointed students towards formal research processes that have had success in HCI. Verplank’s Spiral, for example, takes researchers from ‘Hunch’ to ‘Hack’, guiding a path from idea, through design and prototype, all the way to a well-researched solution or artefact. Such practices are covered in more detail during a core module of the IAI training year: ‘Interactive Design’.

In all, this was a useful and engaging workshop that introduced a diverse set of research practices and perspectives, all of which will prove invaluable during the PhD process.